Planar Object Tracking Benchmark in the Wild

Abstract

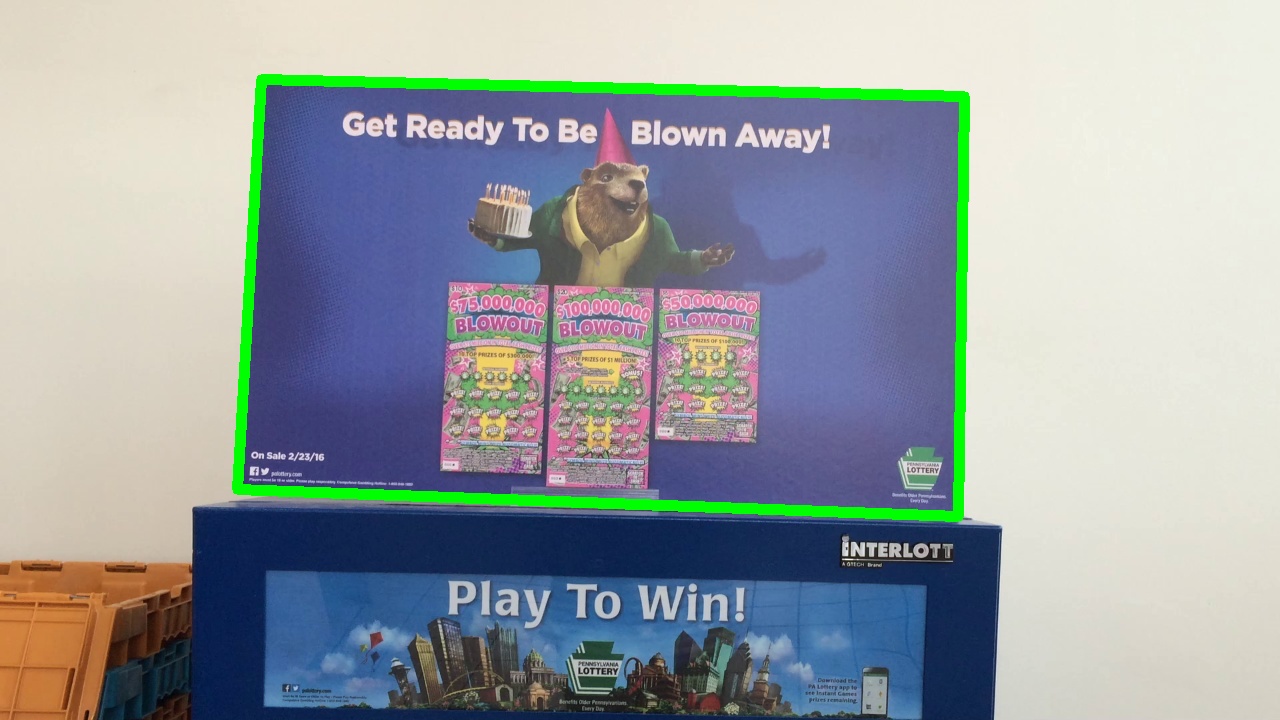

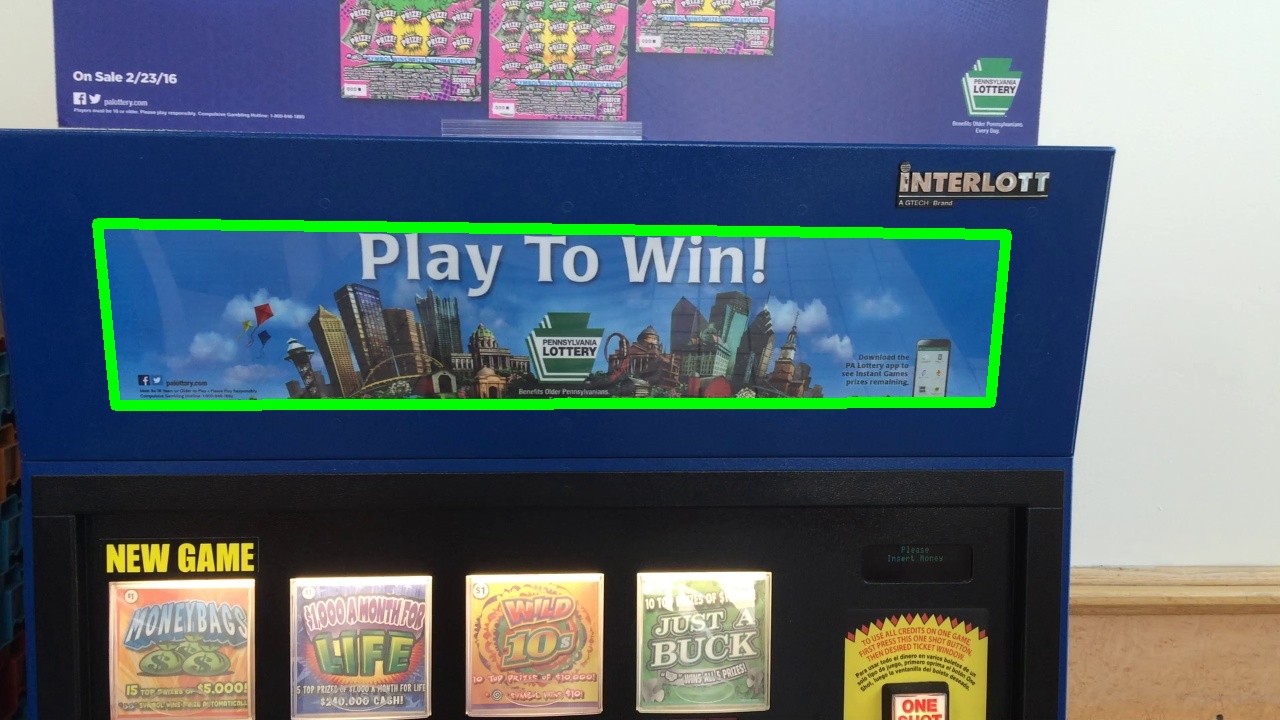

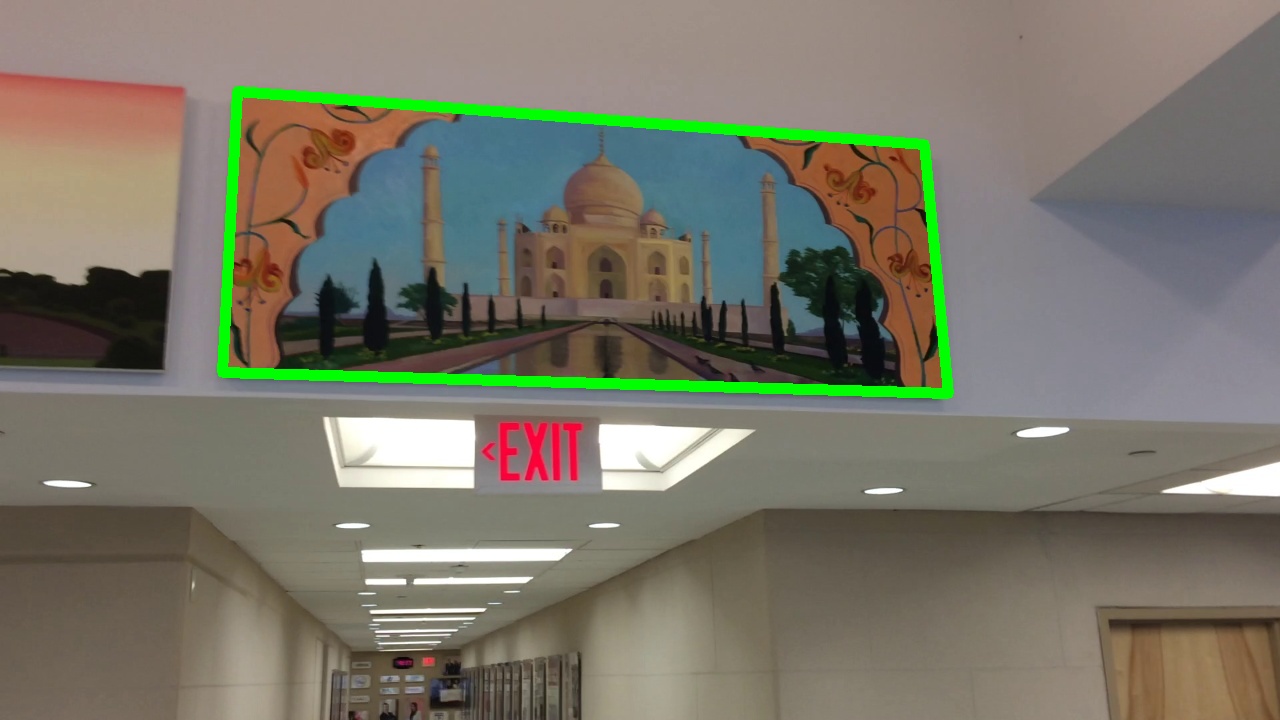

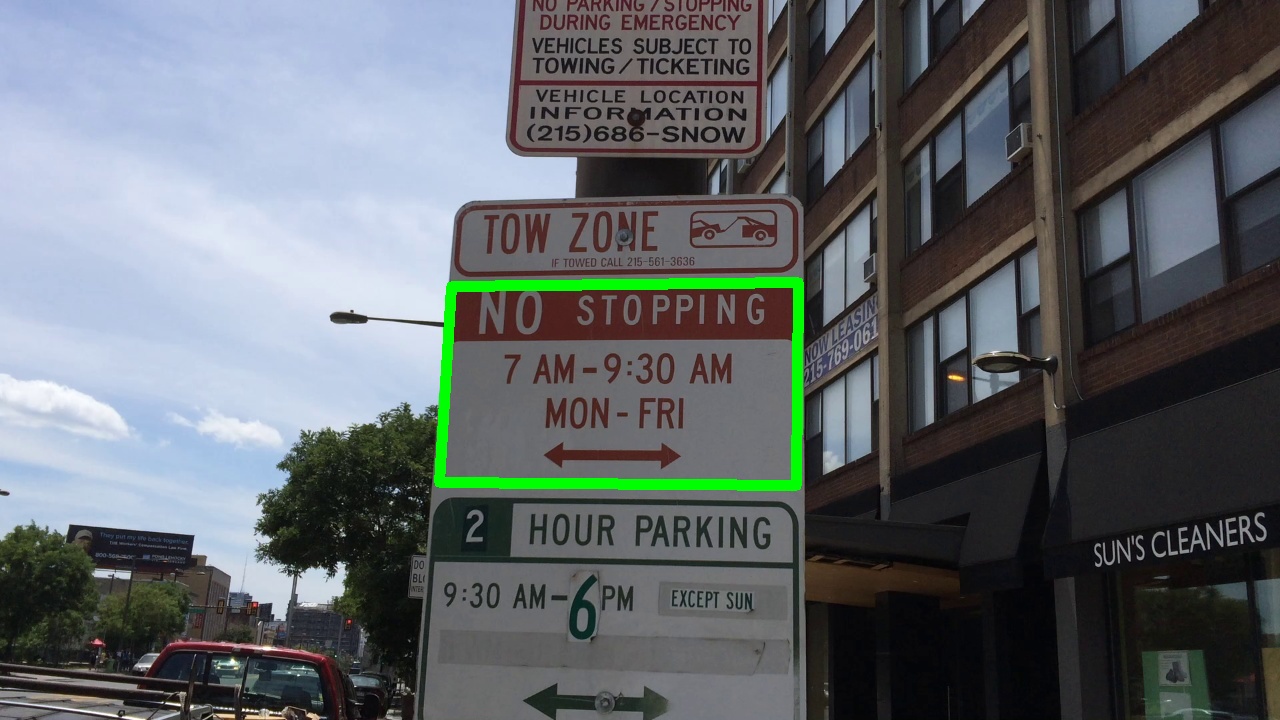

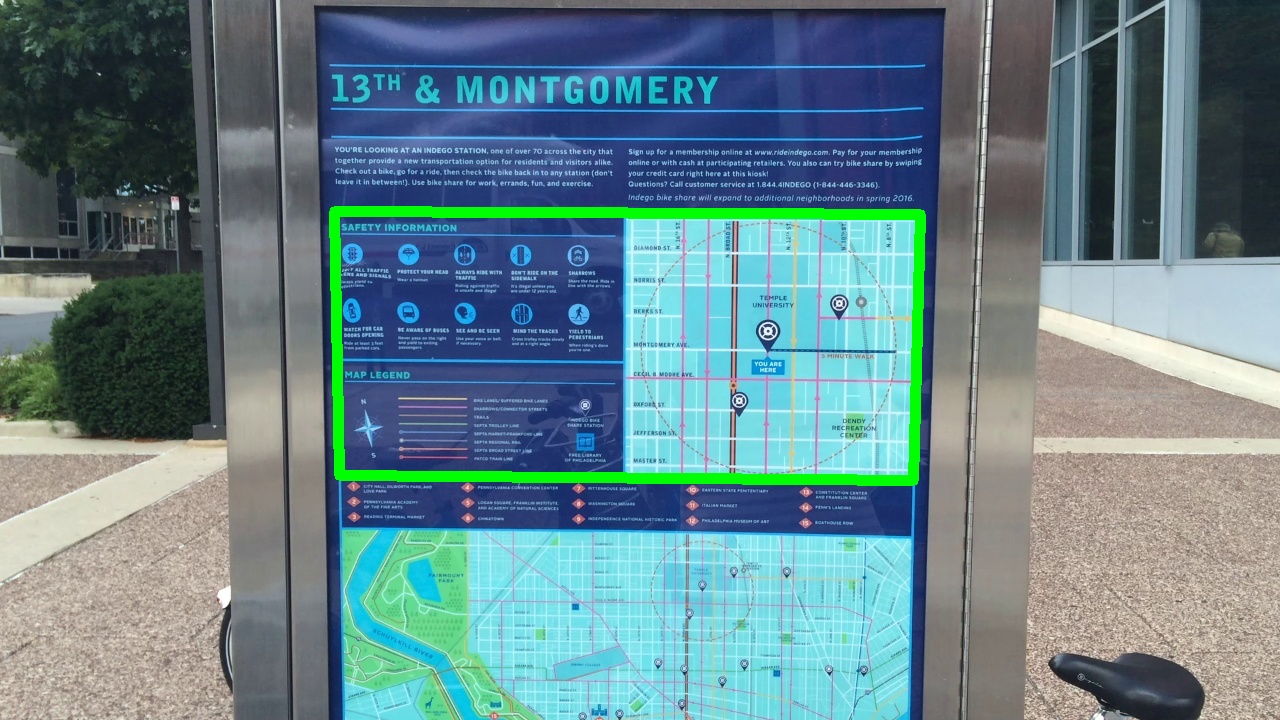

Planar object tracking is an important problem in vision-based robotic systems. Several benchmarks have been constructed to evaluate the tracking algorithms. However, these benchmarks are built in constrained laboratory environments and there is a lack of video sequences captured in the wild to investigate the effectiveness of trackers in practical applications. In this paper, we present a carefully designed planar object tracking benchmark containing 280 videos of 40 planar objects sampled in the natural environment. In particular, for each object, we shoot seven videos involving various challenging factors, namely scale change, rotation, perspective distortion, motion blur, occlusion, out-of-view, and unconstrained. In addition, we design a semi-manual approach to annotate the ground truth with high quality. Moreover, 22 representative algorithms are evaluated on the benchmark using two evaluation metrics. Detailed analysis of the evaluation results is also presented to provide guidance on designing algorithms working in real-world scenarios. We expect that the proposed benchmark would benefit future studies on planar object tracking.

Reference

- Planar Object Tracking Benchmark in the Wild. P. Liang, H. Ji, Y. Wu, Y. Chai, L. Wang, C. Liao, H. Ling. Neurocomputing, 454:254-267, 2021. (A preliminary version appears in ICRA 2018.)

Dataset

All 280 sequences of the dataset can be downloaded separately for each object using the following Google Drive links. The entire dataset is available at Baidu Pan with extraction code "xaou". The ground truth is available for download.

Evaluation

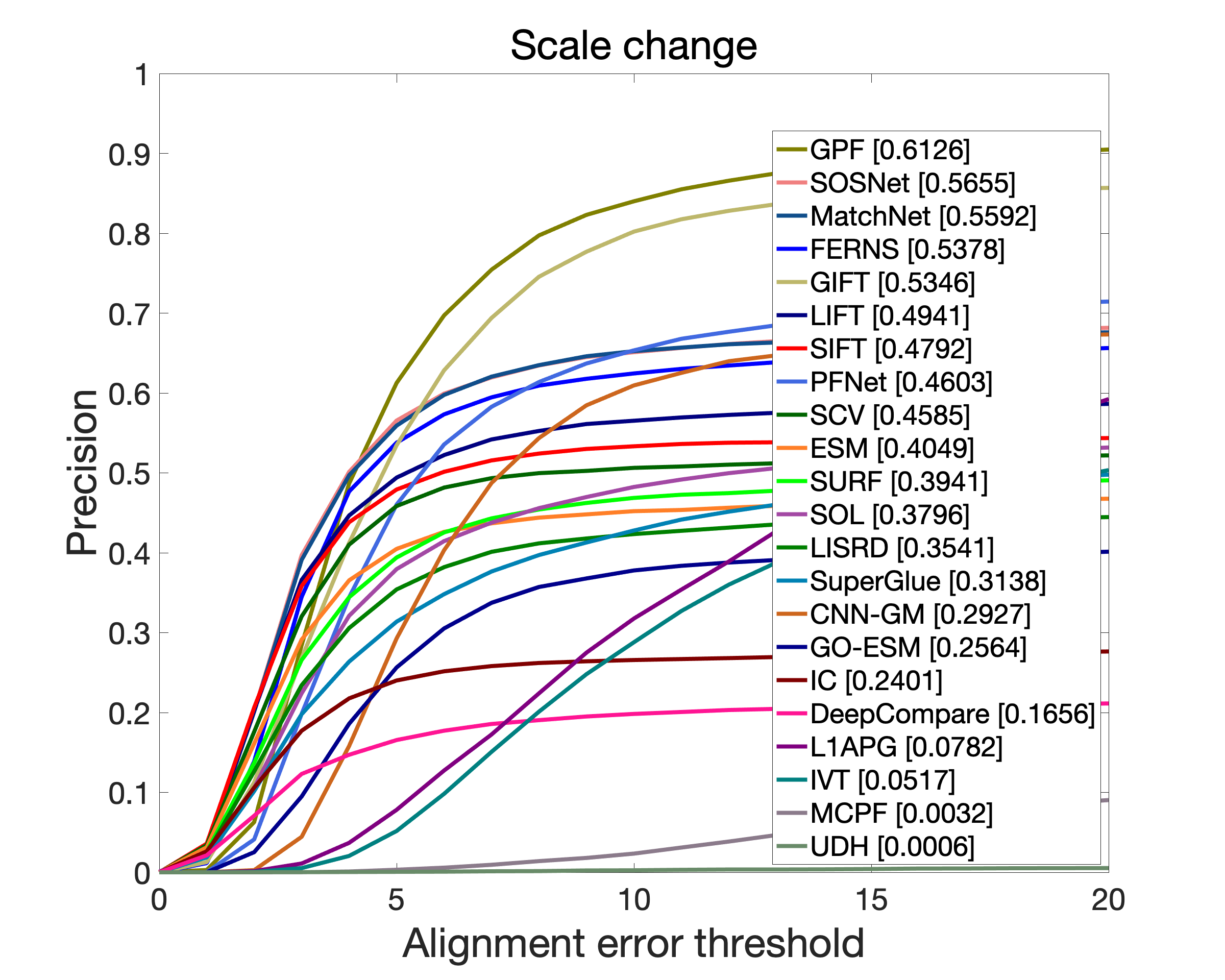

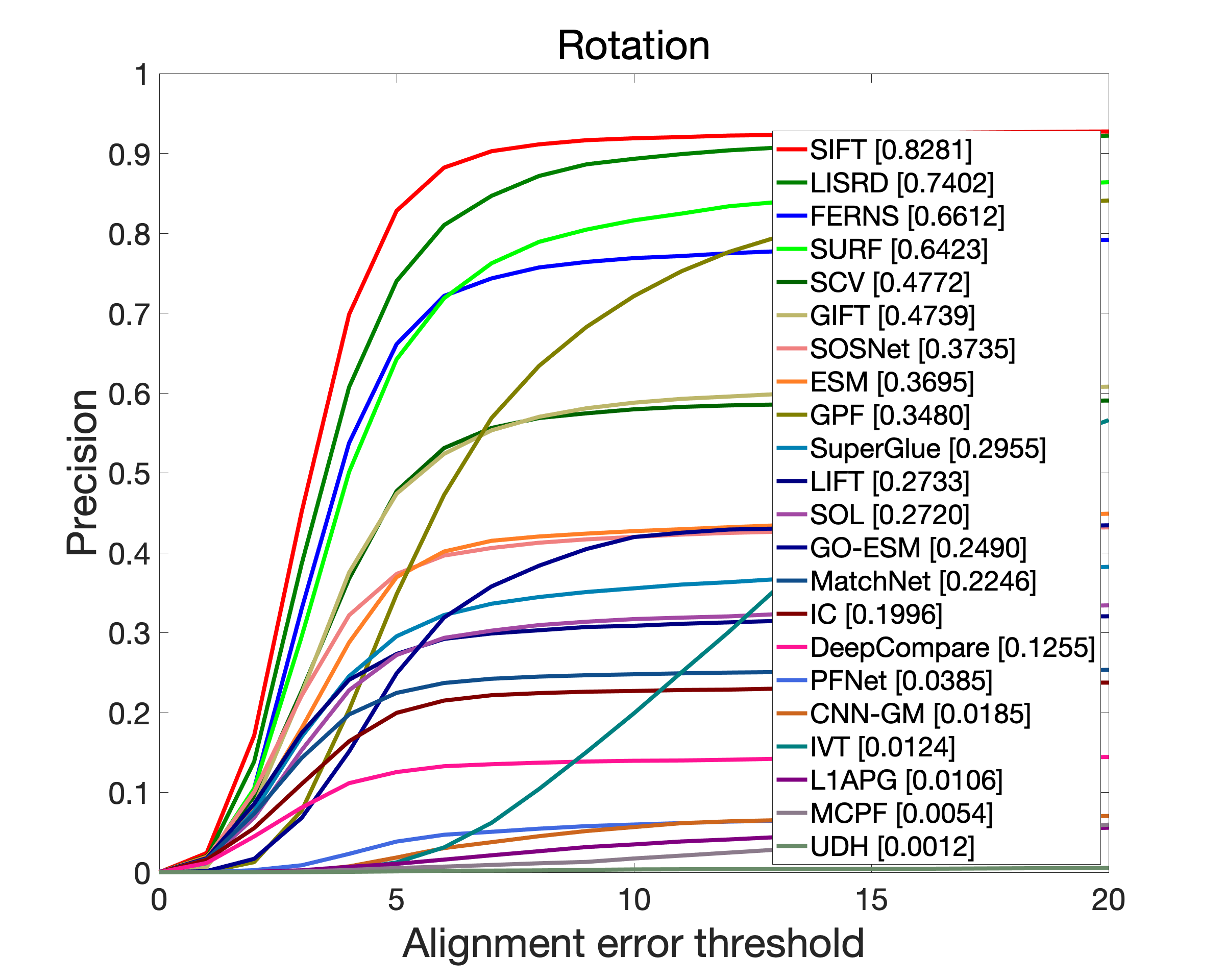

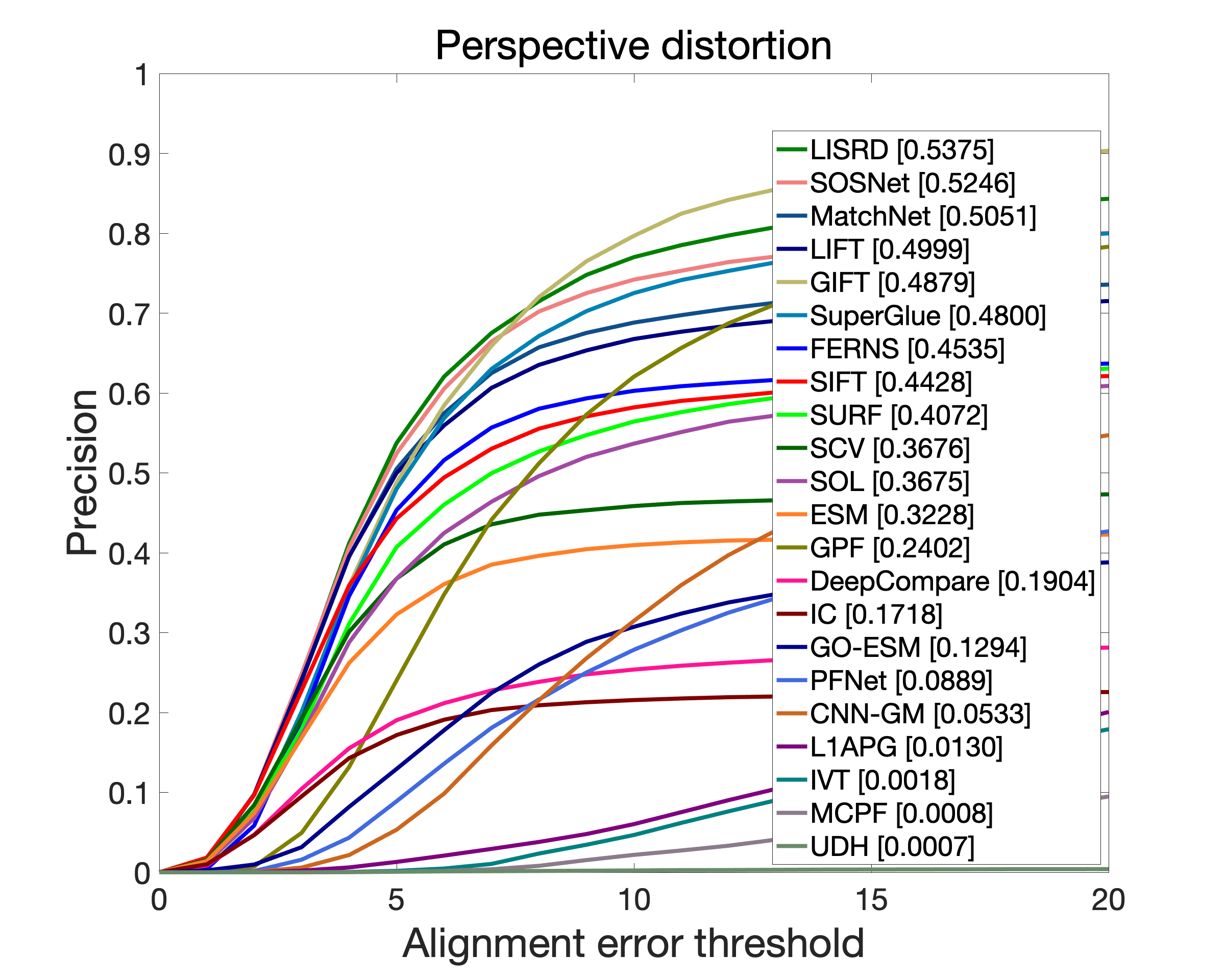

All the evaluation results and the Matlab code for generating the following figures are available at Google Drive or Baidu Pan with extraction code "sioc".


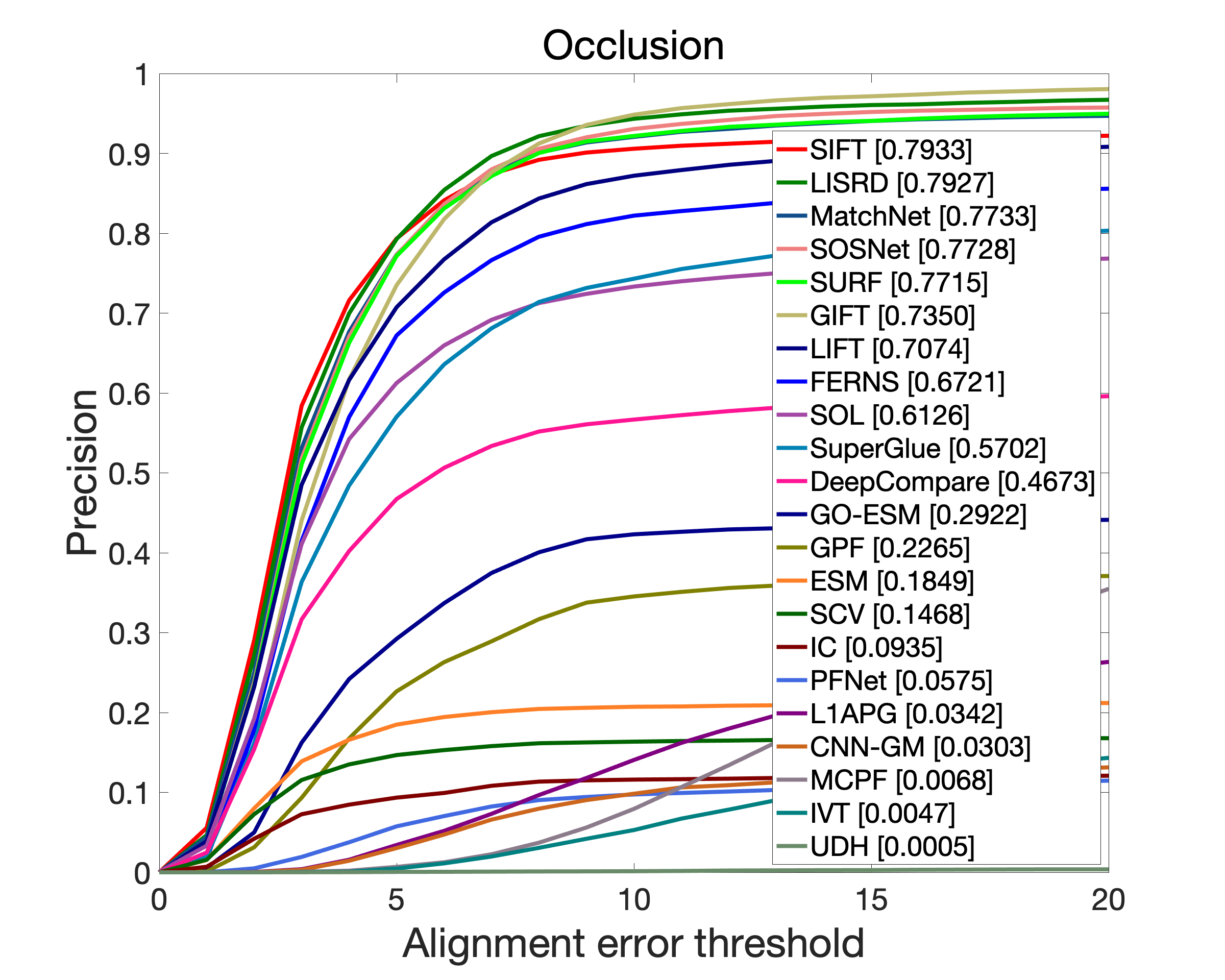

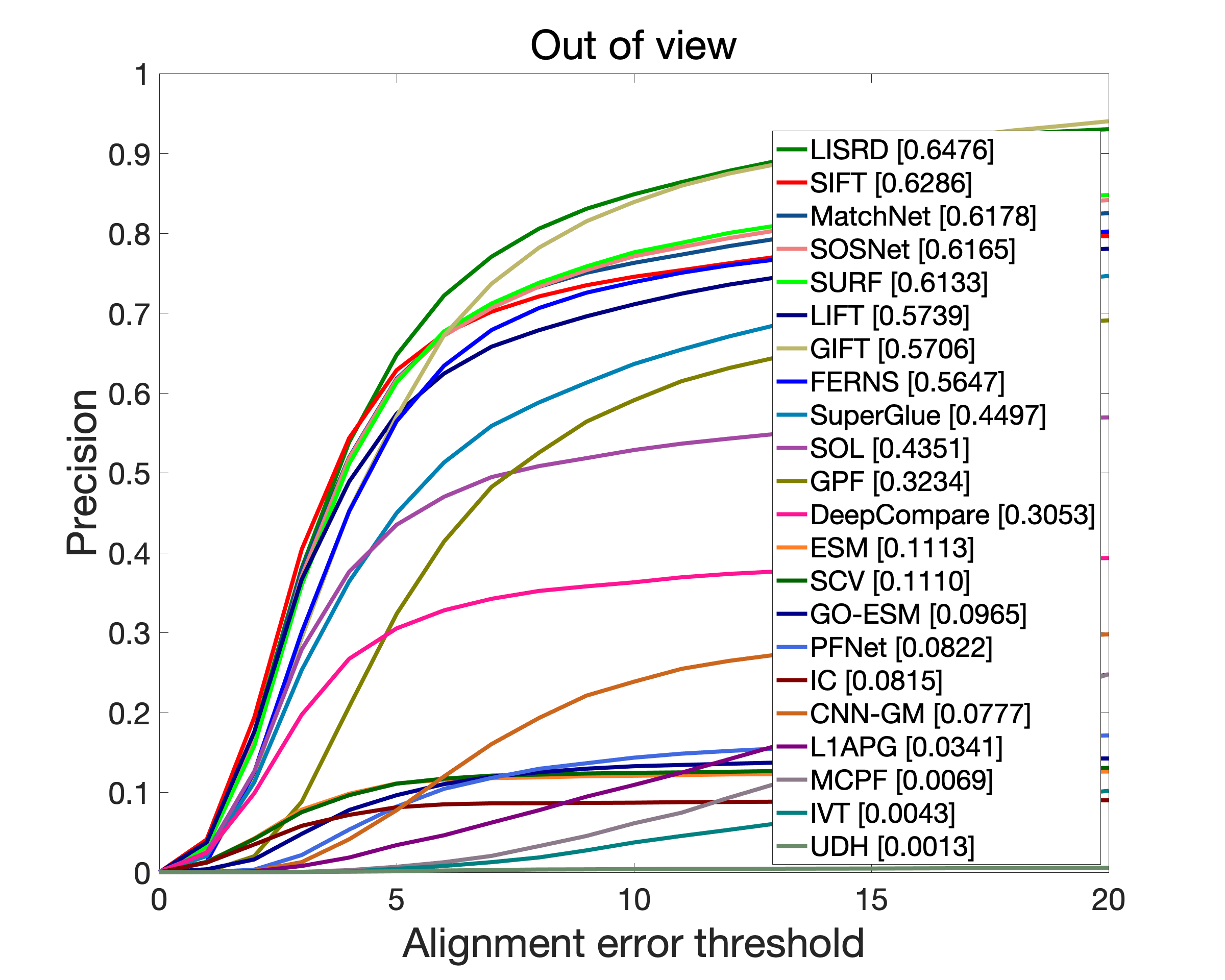

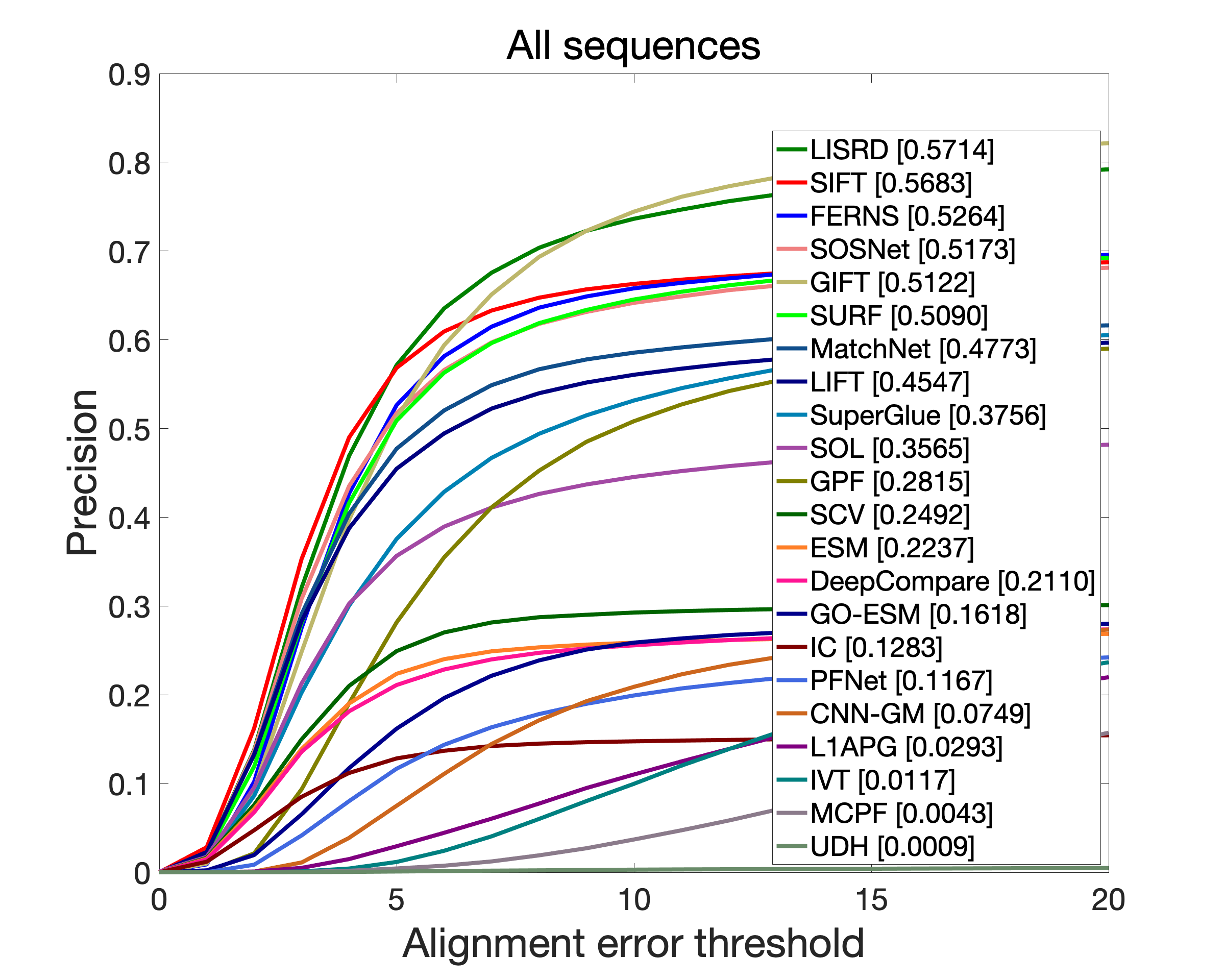

Fig. 1. Comparison of the evaluated trackers using precision plots. The precision at the threshold t_p = 5 is used as the representative score.
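As a rough illustration of how a precision plot of this kind can be computed, the Python sketch below builds a precision curve from per-frame alignment errors and reads off the score at a threshold of 5. It rests on assumptions this page does not state: the alignment error is taken to be the root-mean-square distance between four predicted corner points and their ground-truth positions, a frame counts as correct when its error is at most the threshold, and the function names `alignment_error` and `precision_curve` are hypothetical. The released Matlab code remains the authoritative implementation.

```python
import math


def alignment_error(pred_corners, gt_corners):
    """RMS distance between predicted and ground-truth corner points.

    Assumption: this stands in for the benchmark's alignment error.
    """
    squared = [
        (px - gx) ** 2 + (py - gy) ** 2
        for (px, py), (gx, gy) in zip(pred_corners, gt_corners)
    ]
    return math.sqrt(sum(squared) / len(squared))


def precision_curve(errors, thresholds):
    """Fraction of frames whose error is at most each threshold."""
    n = len(errors)
    return [sum(e <= t for e in errors) / n for t in thresholds]


# Toy data: one ground-truth quadrilateral and four shifted predictions.
gt = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
shifts = [(0, 0), (3, 4), (6, 8), (30, 40)]
preds = [[(x + dx, y + dy) for x, y in gt] for dx, dy in shifts]

errors = [alignment_error(p, gt) for p in preds]
thresholds = list(range(0, 21))
curve = precision_curve(errors, thresholds)

# Representative score: precision at the threshold 5.
representative = curve[thresholds.index(5)]
print(representative)
```

In this toy example two of the four frames have an error of at most 5, so the representative score is 0.5. Plotting `curve` against `thresholds` gives a curve of the same general shape as a precision plot.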

| Tracker | Reference |
| --- | --- |
| CNN-GM | I. Rocco, R. Arandjelovic, and J. Sivic, "Convolutional neural network architecture for geometric matching," PAMI, 2018. |
| DeepCompare | S. Zagoruyko and N. Komodakis, "Learning to compare image patches via convolutional neural networks," CVPR, 2015. |
| ESM | S. Benhimane and E. Malis, "Real-time image-based tracking of planes using efficient second-order minimization," IROS, 2004. |
| FERNS | M. Ozuysal, M. Calonder, V. Lepetit, and P. Fua, "Fast keypoint recognition using random ferns," PAMI, 2010. |
| GIFT | Y. Liu, Z. Shen, Z. Lin, S. Peng, H. Bao, and X. Zhou, "GIFT: Learning transformation-invariant dense visual descriptors via group CNNs," NeurIPS, 2019. |
| GO-ESM | L. Chen, F. Zhou, Y. Shen, X. Tian, H. Ling, and Y. Chen, "Illumination insensitive efficient second-order minimization for planar object tracking," ICRA, 2017. |
| GPF | J. Kwon, H. S. Lee, F. C. Park, and K. M. Lee, "A geometric particle filter for template-based visual tracking," PAMI, 2014. |
| IC | S. Baker and I. Matthews, "Lucas-Kanade 20 years on: A unifying framework," IJCV, 2004. |
| IVT | D. A. Ross, J. Lim, R.-S. Lin, and M.-H. Yang, "Incremental learning for robust visual tracking," IJCV, 2008. |
| LIFT | K. M. Yi, E. Trulls, V. Lepetit, and P. Fua, "LIFT: Learned invariant feature transform," ECCV, 2016. |
| LISRD | R. Pautrat, V. Larsson, M. R. Oswald, and M. Pollefeys, "Online invariance selection for local feature descriptors," ECCV, 2020. |
| L1APG | C. Bao, Y. Wu, H. Ling, and H. Ji, "Real time robust L1 tracker using accelerated proximal gradient approach," CVPR, 2012. |
| MatchNet | X. Han, T. Leung, Y. Jia, R. Sukthankar, and A. C. Berg, "MatchNet: Unifying feature and metric learning for patch-based matching," CVPR, 2015. |
| MCPF | T. Zhang, C. Xu, and M.-H. Yang, "Learning multi-task correlation particle filters for visual tracking," PAMI, 2018. |
| PFNet | R. Zeng, S. Denman, S. Sridharan, and C. Fookes, "Rethinking planar homography estimation using perspective fields," ACCV, 2018. |
| SCV | R. Richa, R. Sznitman, R. Taylor, and G. Hager, "Visual tracking using the sum of conditional variance," IROS, 2011. |
| SIFT | D. G. Lowe, "Distinctive image features from scale-invariant keypoints," IJCV, 2004. |
| SOL | S. Hare, A. Saffari, and P. H. Torr, "Efficient online structured output learning for keypoint-based object tracking," CVPR, 2012. |
| SOSNet | Y. Tian, X. Yu, B. Fan, F. Wu, H. Heijnen, and V. Balntas, "SOSNet: Second order similarity regularization for local descriptor learning," CVPR, 2019. |
| SuperGlue | P.-E. Sarlin, D. DeTone, T. Malisiewicz, and A. Rabinovich, "SuperGlue: Learning feature matching with graph neural networks," CVPR, 2020. |
| SURF | H. Bay, A. Ess, T. Tuytelaars, and L. Van Gool, "Speeded-up robust features (SURF)," CVIU, 2008. |
| UDH | T. Nguyen, S. W. Chen, S. S. Shivakumar, C. J. Taylor, and V. Kumar, "Unsupervised deep homography: A fast and robust homography estimation model," RAL, 2018. |